Paper from the Beijing Institute of Astronautical Systems Engineering | Research on Event Accumulator Settings for Event-Based SLAM

Thanks to Xiao Kun of the Beijing Institute of Astronautical Systems Engineering for contributing this article.

1 Basic Information

2 Summary of the Paper

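An event camera is a novel sensor that works differently from a conventional camera. Each pixel operates independently and asynchronously. It fires an event when the brightness falling on it changes: whenever the increase or decrease exceeds a threshold, an event is output. Compared with conventional cameras, event cameras offer high dynamic range and freedom from motion blur. Accumulating events into event frames and feeding them to a conventional SLAM algorithm is a direct and effective approach to event-based SLAM.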
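The triggering rule described above can be sketched for a single pixel. This minimal illustration assumes the commonly used idealized model, in which the pixel compares its log brightness with the level stored at its last event. The function name and the contrast threshold `C` are hypothetical. Real sensors also add noise, refractory periods, and pixel-to-pixel threshold variation.

```python
import math
from typing import List, Tuple

# An event for one pixel: (timestamp, polarity), polarity +1 = brighter, -1 = darker.
Event = Tuple[float, int]


def generate_events(samples: List[Tuple[float, float]], C: float = 0.2) -> List[Event]:
    """Idealized event generation for one pixel (hypothetical sketch).

    samples: (timestamp, brightness) pairs with brightness > 0, in time order.
    An event fires each time log brightness moves at least C away from the
    reference level recorded at the previous event.
    """
    if C <= 0:
        raise ValueError("contrast threshold C must be positive")
    events: List[Event] = []
    _, b0 = samples[0]
    ref = math.log(b0)  # log brightness at the last event
    for t, b in samples[1:]:
        delta = math.log(b) - ref
        # A large change crosses the threshold several times: one event per crossing.
        while abs(delta) >= C:
            polarity = 1 if delta > 0 else -1
            events.append((t, polarity))
            ref += polarity * C
            delta = math.log(b) - ref
    return events
```

A pixel whose brightness stays constant produces no events. With `C = 0.2`, a doubling of brightness produces three ON events, because ln 2 ≈ 0.69 crosses the threshold three times.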

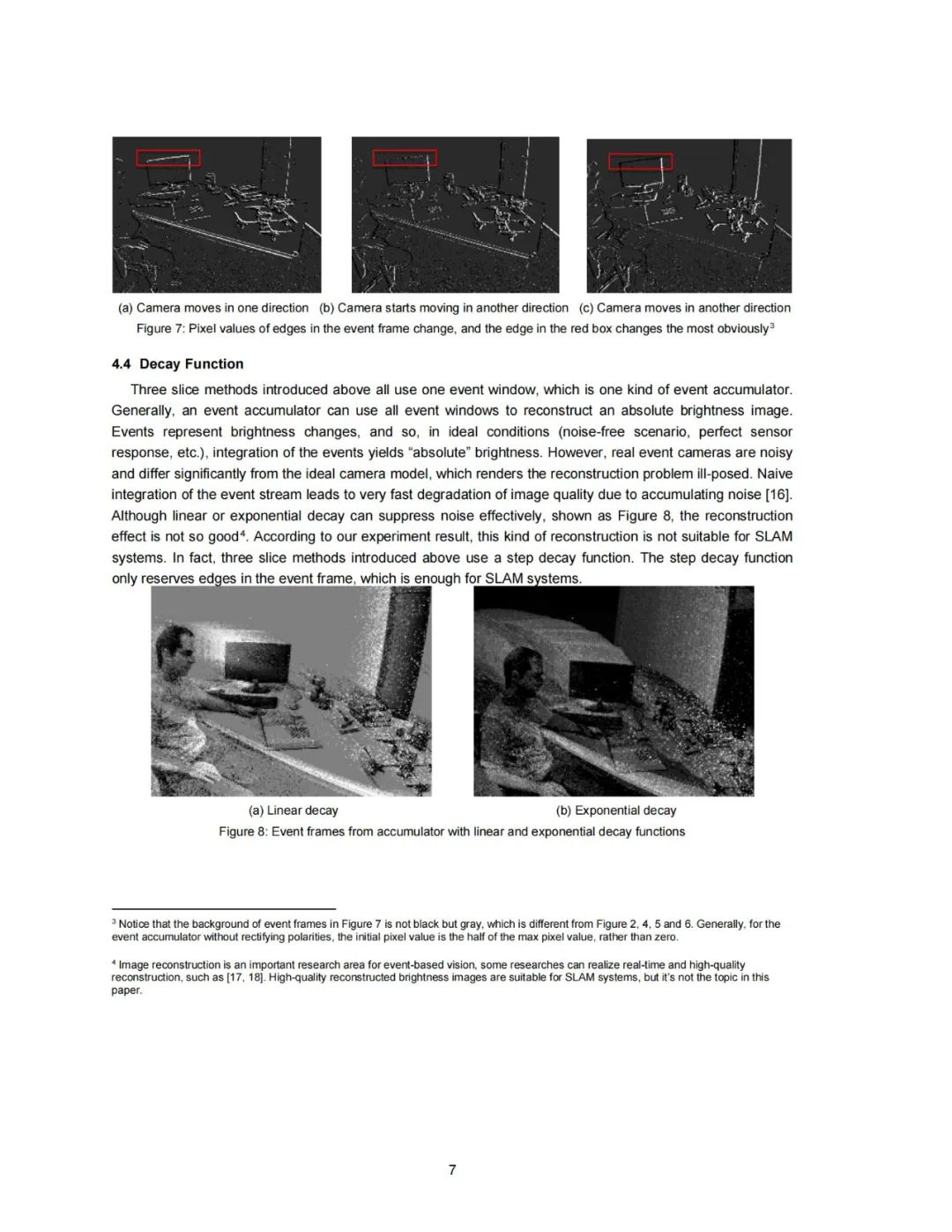

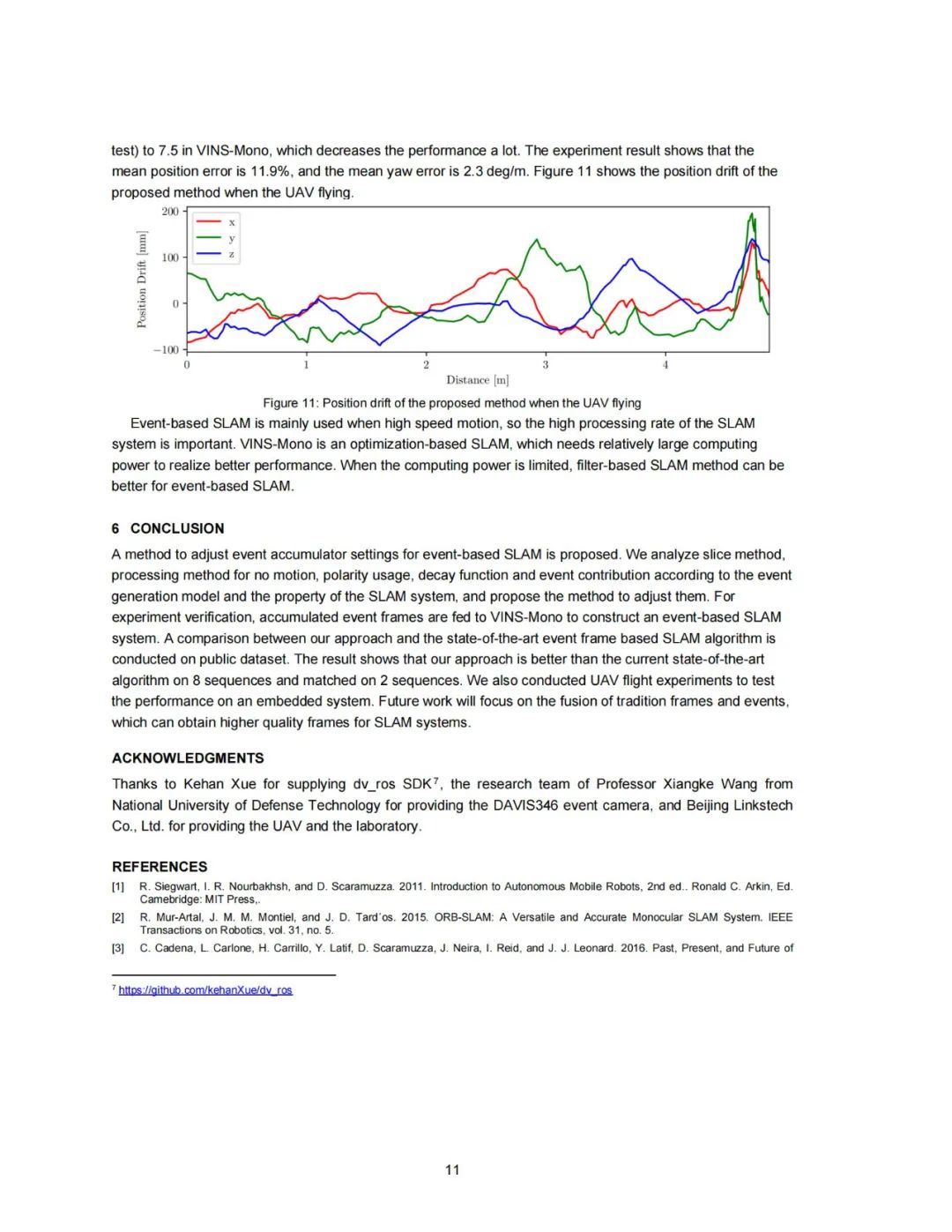

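This paper studies event accumulator settings for event-camera SLAM. It analyzes how the slicing method, the handling of no-motion periods, the use of polarity, the decay function, and the event contribution affect SLAM accuracy. For experimental validation, the accumulated event frames are fed into a conventional SLAM system to build an event-based SLAM system. The accumulator setting strategies were tested on public datasets. Compared with previous work, the method achieves better performance on most datasets. To demonstrate the method's potential in a real-world scenario, we ran it on a quadrotor UAV. The code and results are open source for other developers to use.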
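To make the settings listed above concrete, the following NumPy sketch folds one slice of events into an event frame. It is not the dv_ros implementation or the paper's code. The following are all hypothetical choices for illustration:

- the function and parameter names;
- the neutral gray value 0.5 and the clamping to [0, 1];
- the decay forms;
- the `min_events` no-motion rule.

Slicing, meaning which events are passed in, is left to the caller.

```python
import numpy as np


def accumulate(frame, events, t_prev, t_now, use_polarity=True,
               contribution=0.33, decay="exponential", tau=0.03,
               neutral=0.5, min_events=0):
    """Fold one slice of events into an event frame (hypothetical sketch).

    frame:   2-D float array with values in [0, 1] (the previous event frame).
    events:  int array of shape (N, 3); columns are x, y, polarity (0 or 1).
    t_prev, t_now: timestamps in seconds of the previous and the new frame.
    Returns a new frame; the input frame is left unchanged.
    """
    # No-motion handling (one possible policy): if the slice holds too few
    # events, assume the camera is static and keep the previous frame.
    if len(events) < min_events:
        return frame.copy()

    out = frame.astype(np.float64)  # astype copies by default
    dt = t_now - t_prev

    # Decay: pull the old content back toward the neutral (gray) value.
    if decay == "exponential":
        out = neutral + (out - neutral) * np.exp(-dt / tau)
    elif decay == "linear":
        diff = out - neutral
        # Shrink |diff| by dt / tau, stopping at zero (no overshoot).
        out = neutral + np.sign(diff) * np.maximum(np.abs(diff) - dt / tau, 0.0)
    elif decay == "step":
        out = np.full_like(out, neutral)  # forget everything before this slice
    elif decay != "none":
        raise ValueError(f"unknown decay function: {decay}")

    if len(events) > 0:
        x, y, p = events[:, 0], events[:, 1], events[:, 2]
        # With polarity, OFF events darken and ON events brighten;
        # without it, every event brightens its pixel.
        sign = np.where(p > 0, 1.0, -1.0) if use_polarity else np.ones(len(p))
        # np.add.at accumulates correctly when several events hit one pixel.
        np.add.at(out, (y, x), sign * contribution)

    return np.clip(out, 0.0, 1.0)
```

Ignoring polarity makes every event brighten its pixel. A larger `contribution` saturates a pixel after fewer events. These are the kinds of trade-offs the paper evaluates.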

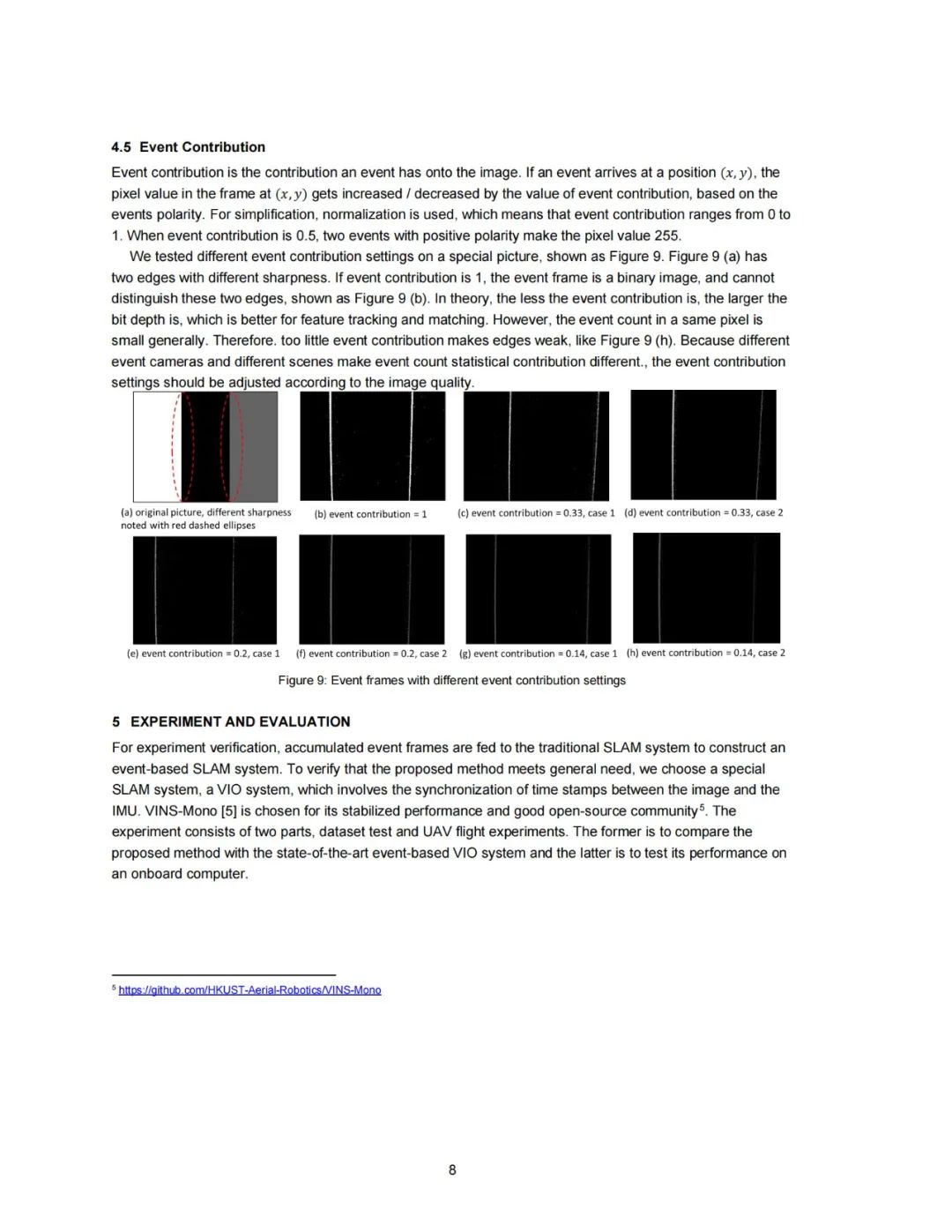

https://gitee.com/robin_shaun/event-slam-accumulator-settings

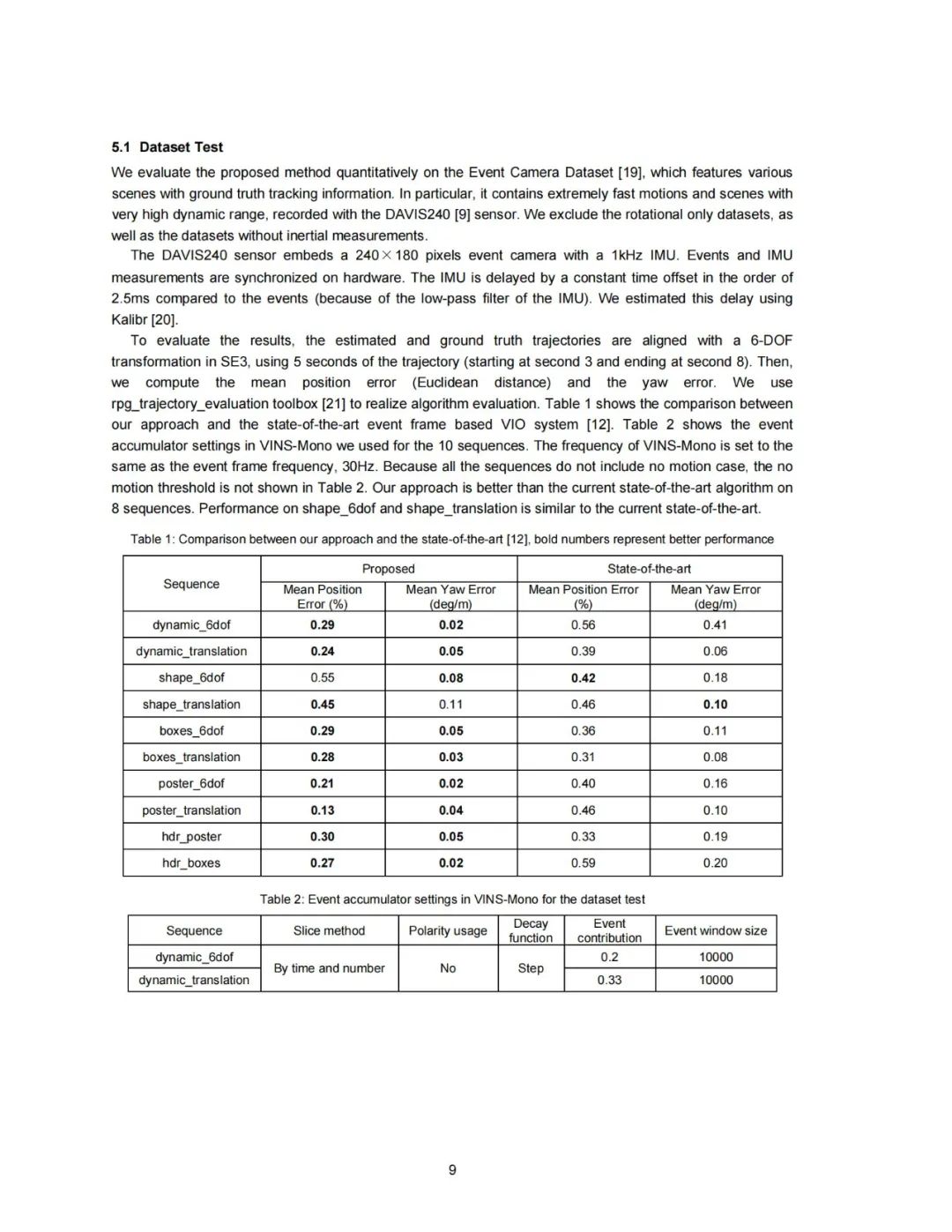

Research on Event Accumulator Settings for Event-Based SLAM

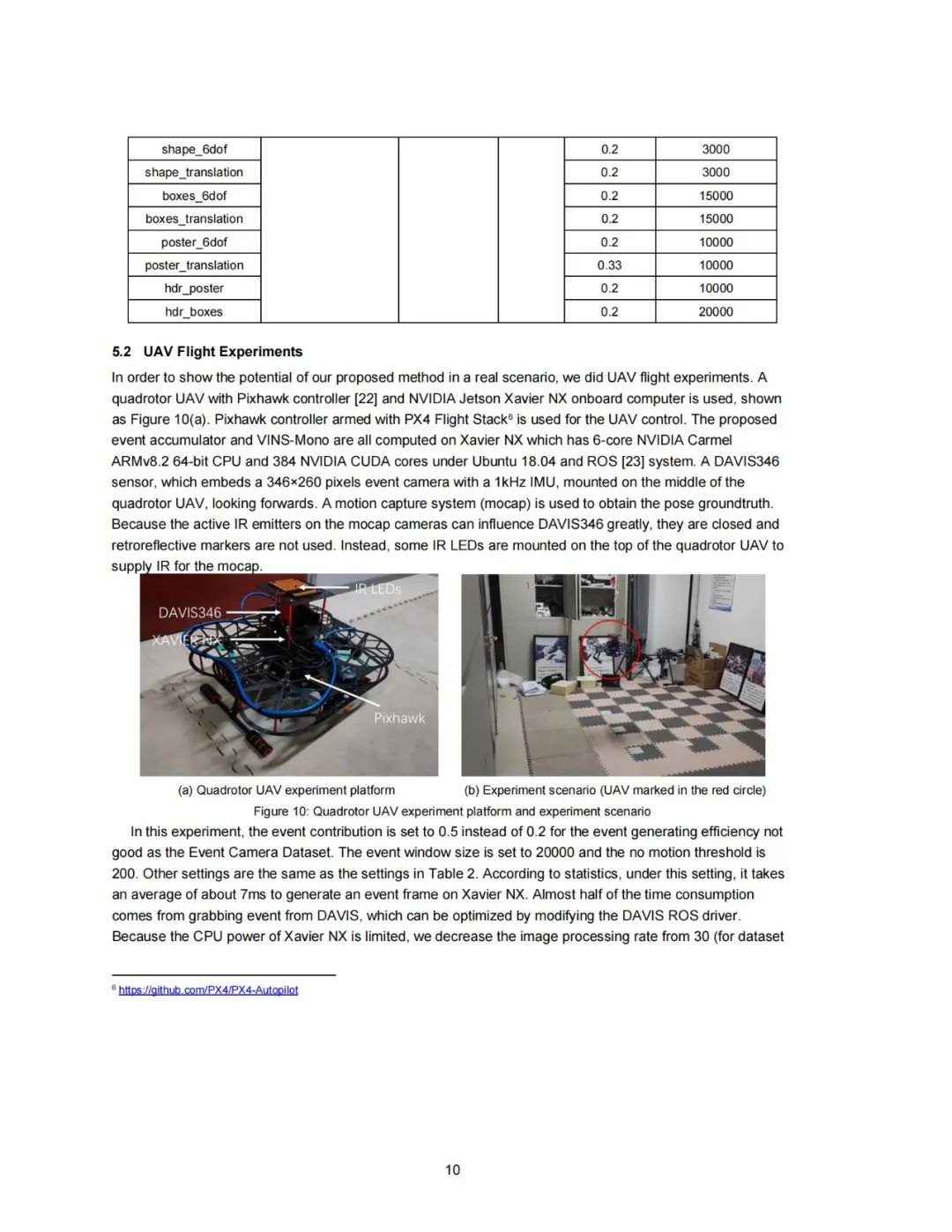

This is the source code for the paper "Research on Event Accumulator Settings for Event-Based SLAM". For more details, please refer to https://arxiv.org/abs/2112.00427

1. Prerequisites

See dv_ros and VINS-Fusion

2. Build

cd ~/catkin_ws/src

git clone https://github.com/robin-shaun/event-slam-accumulator-settings.git

cd ../

catkin_make # or catkin build

source ~/catkin_ws/devel/setup.bash

3. Demo

We evaluate the proposed method quantitatively on the Event Camera Dataset. This demo uses the dynamic_6dof sequence as an example.

First, start dv_ros. Note that the event accumulator depends on timestamps. Whenever you restart the dataset playback or the DAVIS driver, you must also restart dv_ros.

roslaunch dv_ros davis240.launch

Then, start VINS-Fusion:

roslaunch vins vins_rviz.launch

rosrun vins vins_node ~/catkin_ws/src/VINS-Fusion/config/davis/rpg_240_mono_imu_config.yaml

Finally, play the rosbag

cd ~/catkin_ws/src/event-slam-accumulator-settings/dataset

rosbag play dynamic_6dof.bag

Note that VINS-Fusion runs by default at the event frame rate, 30 Hz. If your CPU is not powerful enough, you can reduce this to 15 Hz by uncommenting the if-block below in this file. However, this will also reduce performance.

// Uncomment the if-block to push only every second frame (30 Hz -> 15 Hz).
// if(inputImageCnt % 2 == 0)
// {
    mBuf.lock();
    featureBuf.push(make_pair(t, featureFrame));
    mBuf.unlock();
// }

4. Run with your devices

We have tested the code with the DAVIS240 and DAVIS346. To run it with your own device, use rpg_dvs_ros. The most important step is calibrating the event camera and the IMU. We recommend running Kalibr on conventional APS images and IMU data, because the intrinsics and extrinsics of the APS and the DVS are almost identical.

To compare event-based VINS-Fusion with conventional VINS-Fusion on a DAVIS346, use this code. The size of APS frames from the DAVIS346 sometimes changes, so we made some modifications to VINS-Fusion.

5. Run with ORBSLAM3 for Stereo Visual SLAM

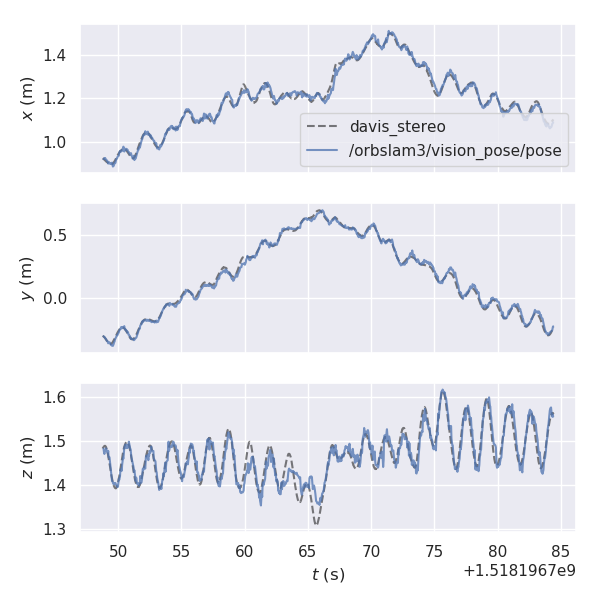

Stereo visual SLAM based on event frames is not covered in the paper. We use ORBSLAM3 to process the event frames from dv_ros. We measure absolute trajectory error (RMS, in cm) with the Python package for the evaluation of odometry and SLAM. By this measure, the proposed method outperforms ESVO on all four sequences.

| Sequence | Proposed (cm) | ESVO (cm) |
|---|---|---|
| monitor | 1.49 | 3.3 |
| bin | 2.66 | 2.8 |
| box | 3.51 | 5.8 |
| desk | 3.14 | 3.2 |

Accumulator settings: event window size 15000, event contribution 0.33.

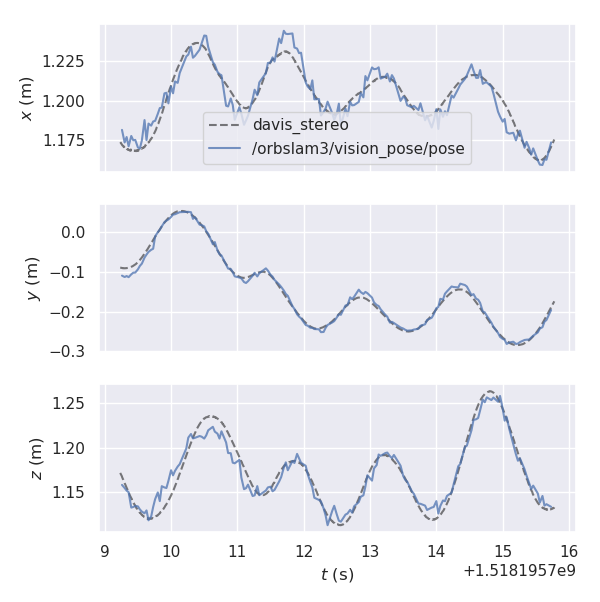

monitor

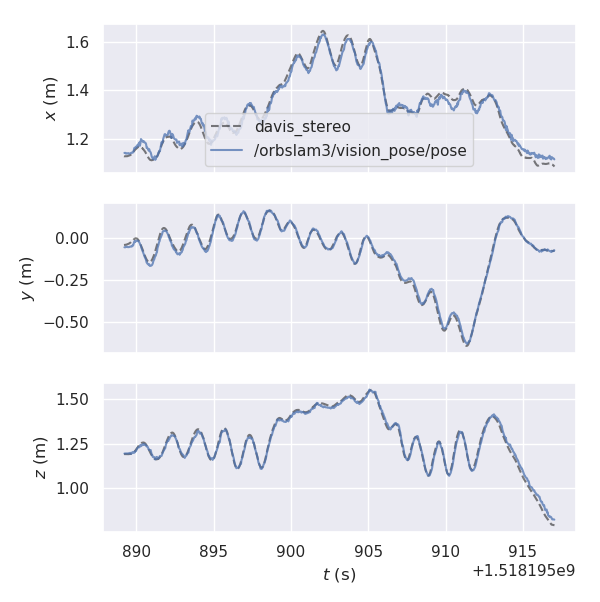

bin

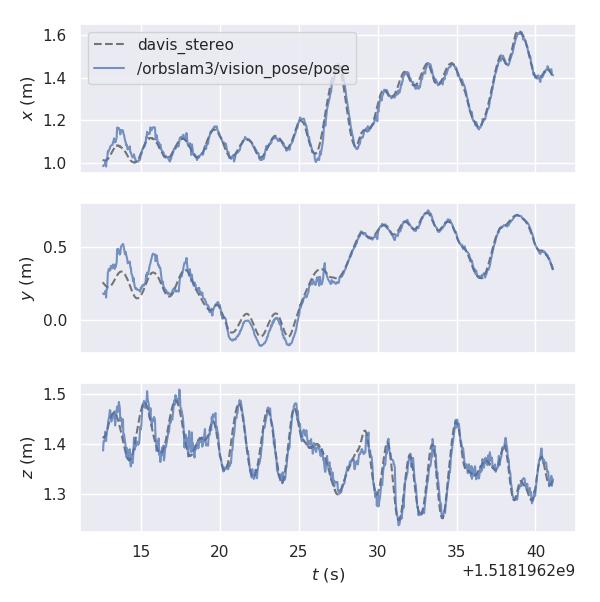

box

desk
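The RMS absolute trajectory error in the table above can be sketched in two steps:

1. Rigidly align the estimated positions to ground truth: rotation plus translation, solved in closed form with an SVD as in the Kabsch/Umeyama method, without scale.
2. Take the root mean square of the remaining position errors.

This minimal NumPy sketch assumes both trajectories are already time-associated, so row i of each array refers to the same timestamp. The function names are hypothetical, and this is not the evaluation package's own code. The result has the unit of the input, so positions in meters must be multiplied by 100 to compare with the centimeter values in the table.

```python
import numpy as np


def align_se3(est, gt):
    """Rotation R and translation t minimizing sum ||R @ est_i + t - gt_i||^2."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    # Cross-covariance of centered ground truth and centered estimate (3 x 3).
    H = (gt - mu_g).T @ (est - mu_e)
    U, _, Vt = np.linalg.svd(H)
    # Reflection guard: force det(R) = +1 so R is a proper rotation.
    S = np.eye(3)
    S[2, 2] = 1.0 if np.linalg.det(U @ Vt) > 0 else -1.0
    R = U @ S @ Vt
    t = mu_g - R @ mu_e
    return R, t


def ate_rmse(est, gt):
    """RMS position error after rigid alignment; est and gt are (N, 3) arrays."""
    R, t = align_se3(est, gt)
    aligned = est @ R.T + t  # applies R @ est_i + t to every row
    err = np.linalg.norm(aligned - gt, axis=1)
    return float(np.sqrt(np.mean(err ** 2)))
```

Aligning before measuring matters because a visual SLAM system fixes its own world frame at start-up. Without alignment, a constant rotation or offset would dominate the error.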

First, compile ORBSLAM3 with ROS according to this. Then use this script to run ORBSLAM3. The script subscribes to the event frames and publishes the estimated poses; we modified ORBSLAM3 slightly so that it publishes them.

6. Evaluate results

We modified rpg_trajectory_evaluation to print the mean position error and mean yaw error in the terminal. You can evaluate the results shown in the paper with:

python analyze_trajectory_single.py ../results/boxes_6dof

7. Acknowledgements

Thanks to dv_ros and all the other open-source projects we use.

3 Research Background

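For mobile robots, perceiving the environment and estimating their own position are essential. Visual simultaneous localization and mapping (SLAM) and visual odometry (VO) have proven highly accurate and robust in many applications. Adding an inertial measurement unit (IMU) can further improve the accuracy and robustness of VO, which is known as visual-inertial odometry (VIO). However, very fast motion and scenes with high-dynamic-range (HDR) illumination remain challenging for visual-inertial systems.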

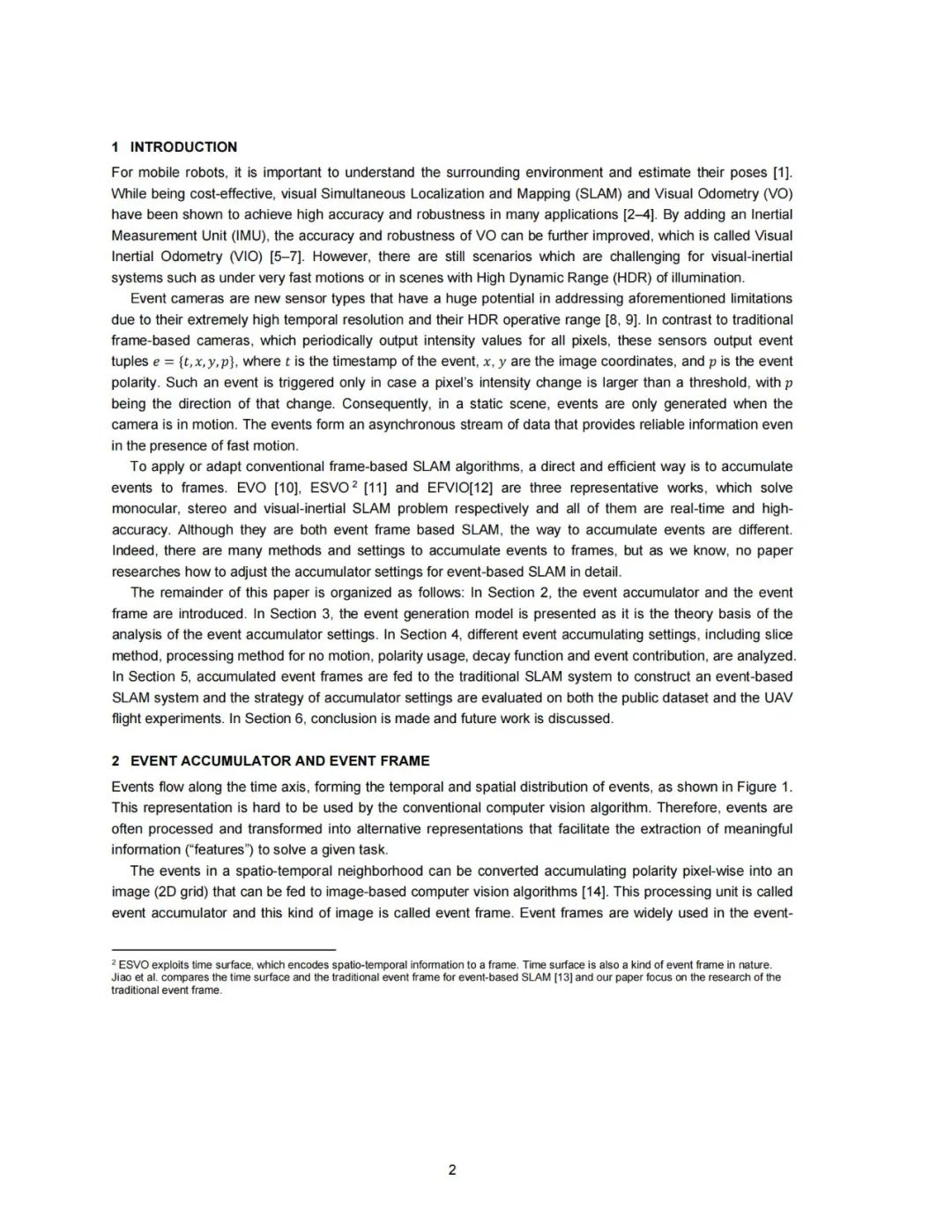

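The event camera is a novel sensor whose extremely high temporal resolution and high dynamic range give it great potential for overcoming these limitations. A conventional frame-based camera periodically outputs the intensity of every pixel. An event camera instead outputs a stream of events, each consisting of a timestamp, image coordinates, and a polarity. An event is triggered only when the intensity change at a pixel exceeds a threshold. Consequently, in a static scene, events are generated only when the camera moves. These events form an asynchronous data stream that provides reliable information even under fast motion.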

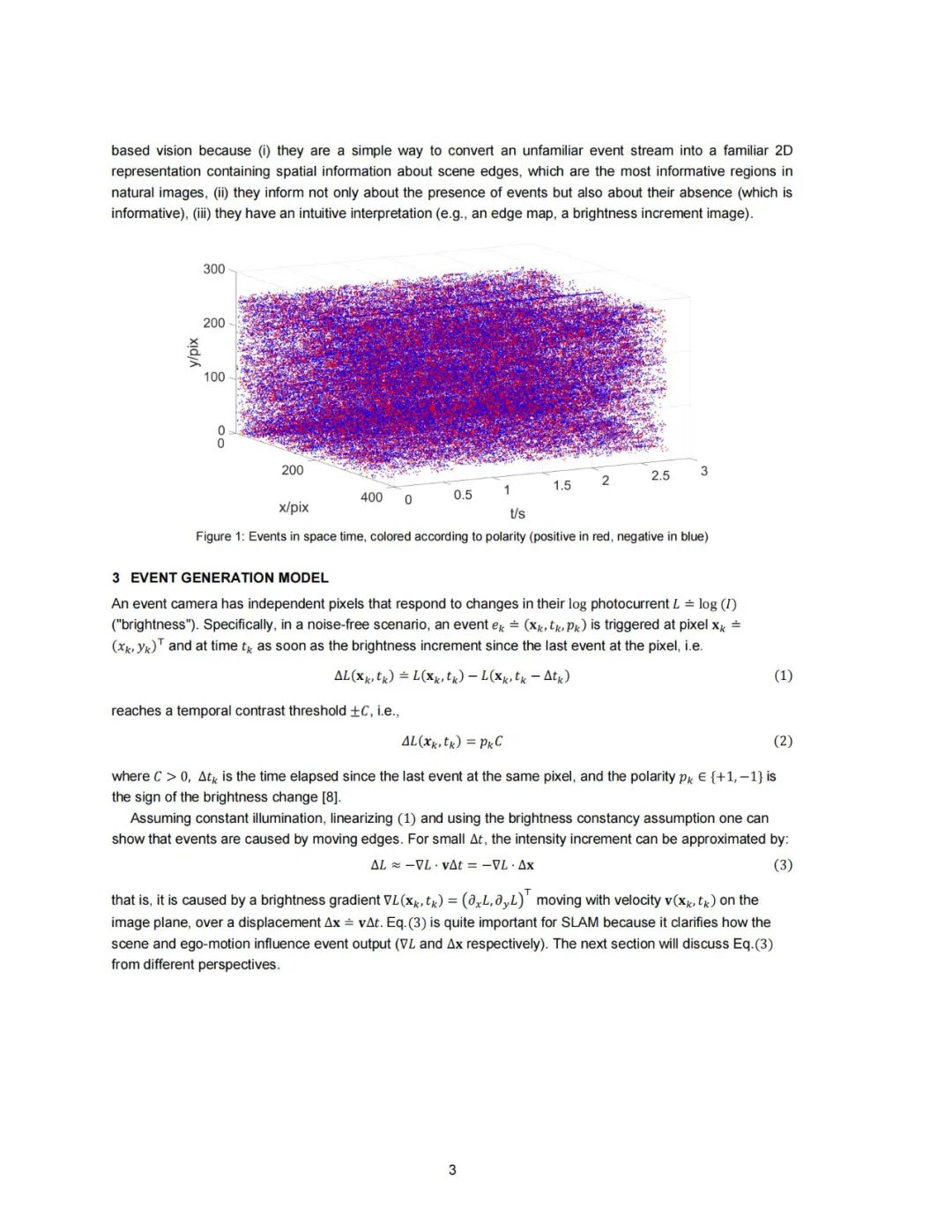

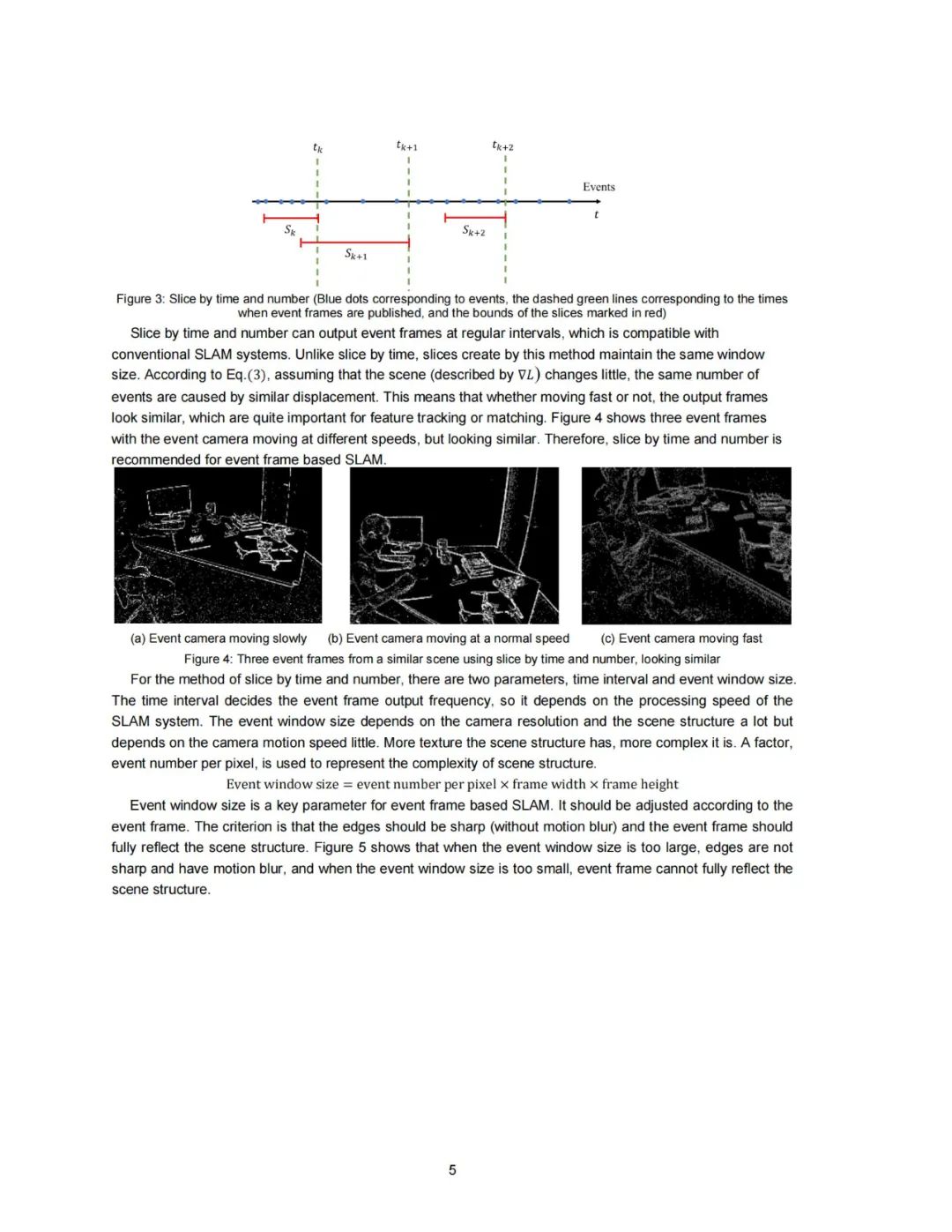

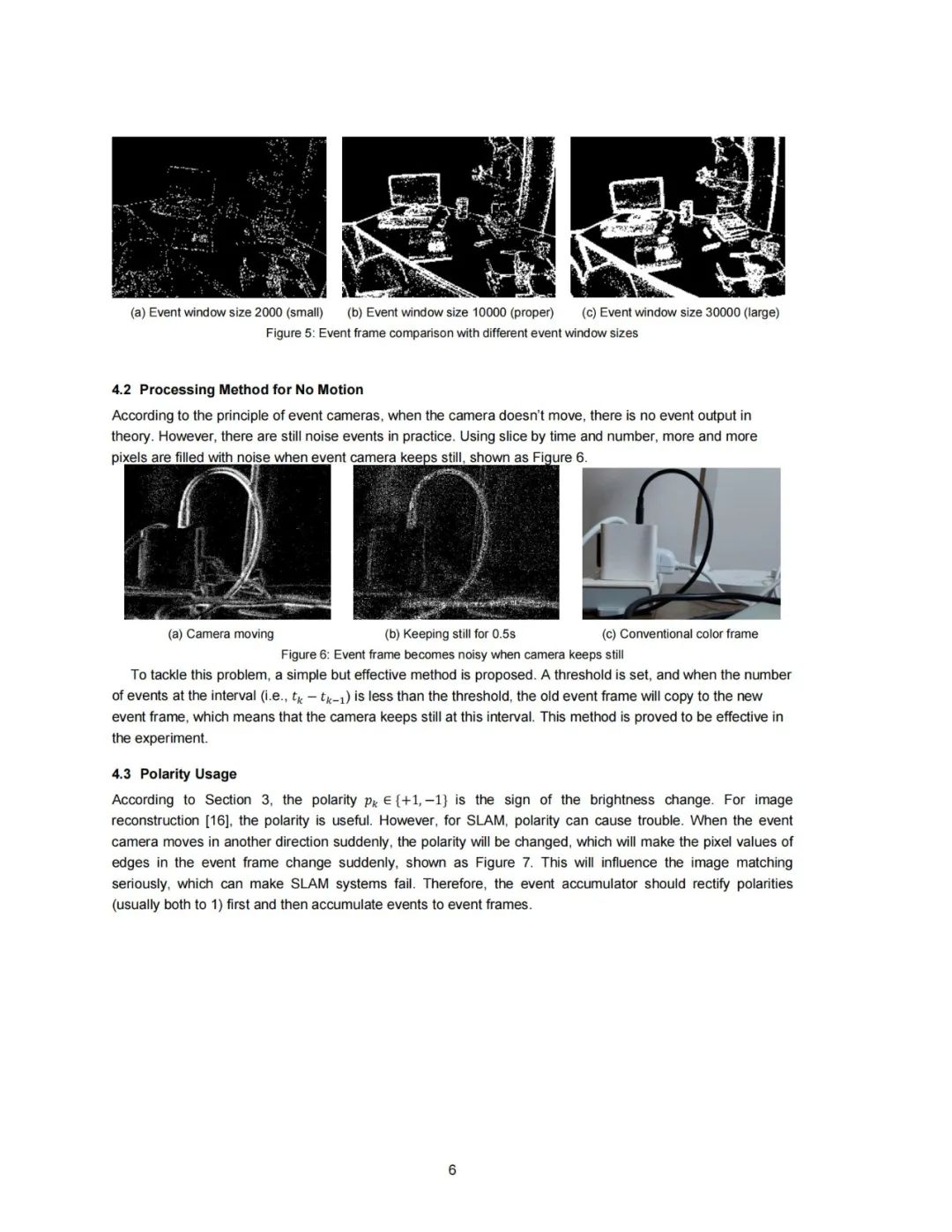

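A direct and effective way to apply or adapt conventional frame-based SLAM algorithms is to accumulate events into frames. Three representative works are EVO [10], ESVO [11], and EFVIO [12], which address monocular, stereo, and visual-inertial SLAM respectively. All three run in real time with high accuracy. Although all of them are based on event frames, each accumulates events differently. In fact, there are many methods and settings for accumulating events into frames. To our knowledge, however, no paper has systematically studied how to tune the accumulator settings for event-camera SLAM.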
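One setting in which accumulators differ is how the event stream is sliced into frames. The sketch below contrasts two common choices, a fixed time interval and a fixed number of events, on a sorted array of timestamps. The function names are hypothetical, and this is not code from EVO, ESVO, EFVIO, or dv_ros. Each slice is returned as a (start, stop) index pair into the event arrays.

```python
import numpy as np


def slice_by_time(t, period):
    """Split sorted, non-empty timestamps t (s) into slices of equal duration.

    Returns (start, stop) index pairs. Empty slices are kept, so a static
    scene shows up as slices that contain no events.
    """
    # Slice number of every event; non-decreasing because t is sorted.
    k = np.floor((t - t[0]) / period).astype(np.int64)
    n_slices = int(k[-1]) + 1
    # Index of the first event in each slice (plus one past the last slice).
    starts = np.searchsorted(k, np.arange(n_slices + 1), side="left")
    return [(int(a), int(b)) for a, b in zip(starts[:-1], starts[1:])]


def slice_by_count(t, n):
    """Split the stream into slices of n events each (the last may be shorter)."""
    return [(i, min(i + n, len(t))) for i in range(0, len(t), n)]
```

Time slicing yields a constant frame rate, but in a static scene its slices are empty or nearly empty, which is where no-motion handling matters. Count slicing gives every frame the same number of events, but the frame rate then follows scene activity.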

4 Engineering Application Value of the Paper

5 Research Value of LINKS-TECH (灵思创奇) Equipment

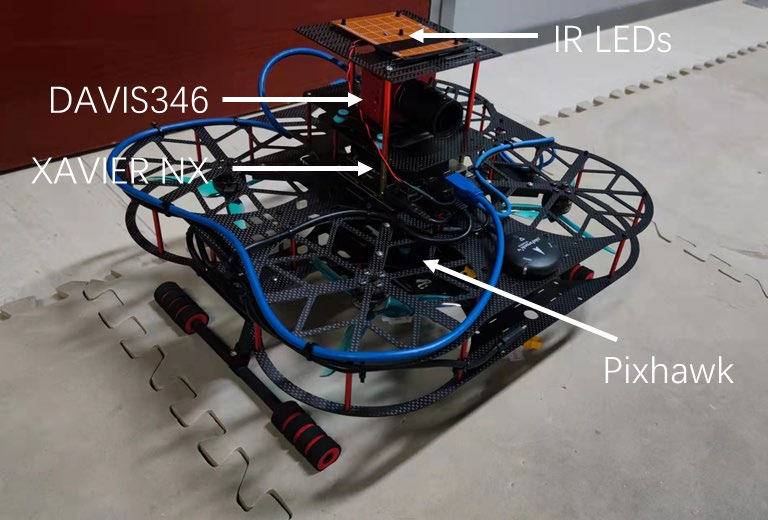

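This study used LINKS-TECH's indoor flight arena, motion capture system, and quadrotor UAV. The motion capture system provided ground-truth poses for evaluating the algorithm's accuracy. The quadrotor carries a Pixhawk flight controller and a Xavier NX onboard computer, and the Xavier NX runs the event-camera SLAM computation.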


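Beijing Lingsi Chuangqi Technology Co., Ltd. (北京灵思创奇科技有限公司)

Tel: 010-5732 5131

Fax: 010-5732 5130

Website: www.linkstech.com.cn

WeChat official account: LINKS-TECH

Address: Building 17, Beikong Hongchuang Science and Technology Park, Changping District, Beijing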